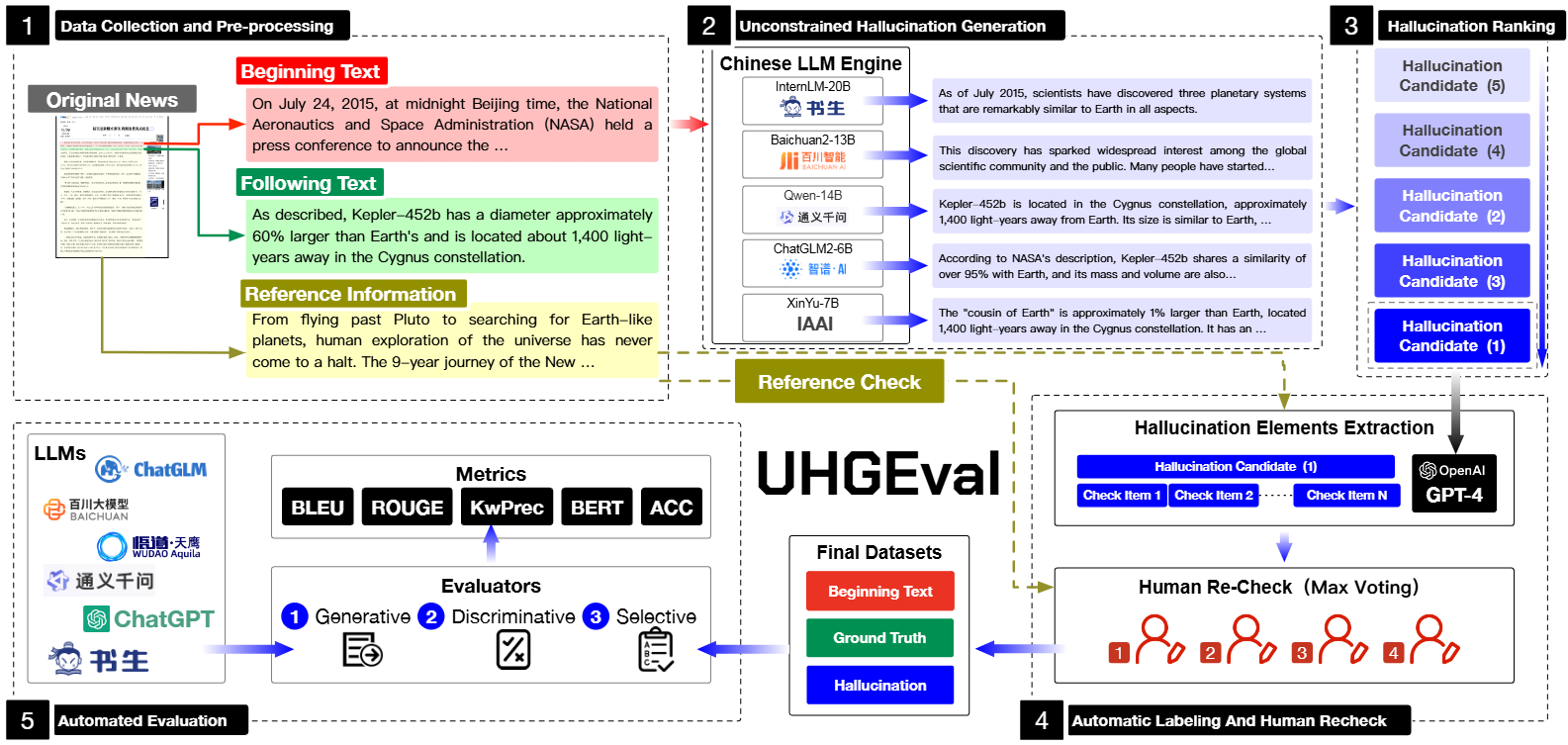

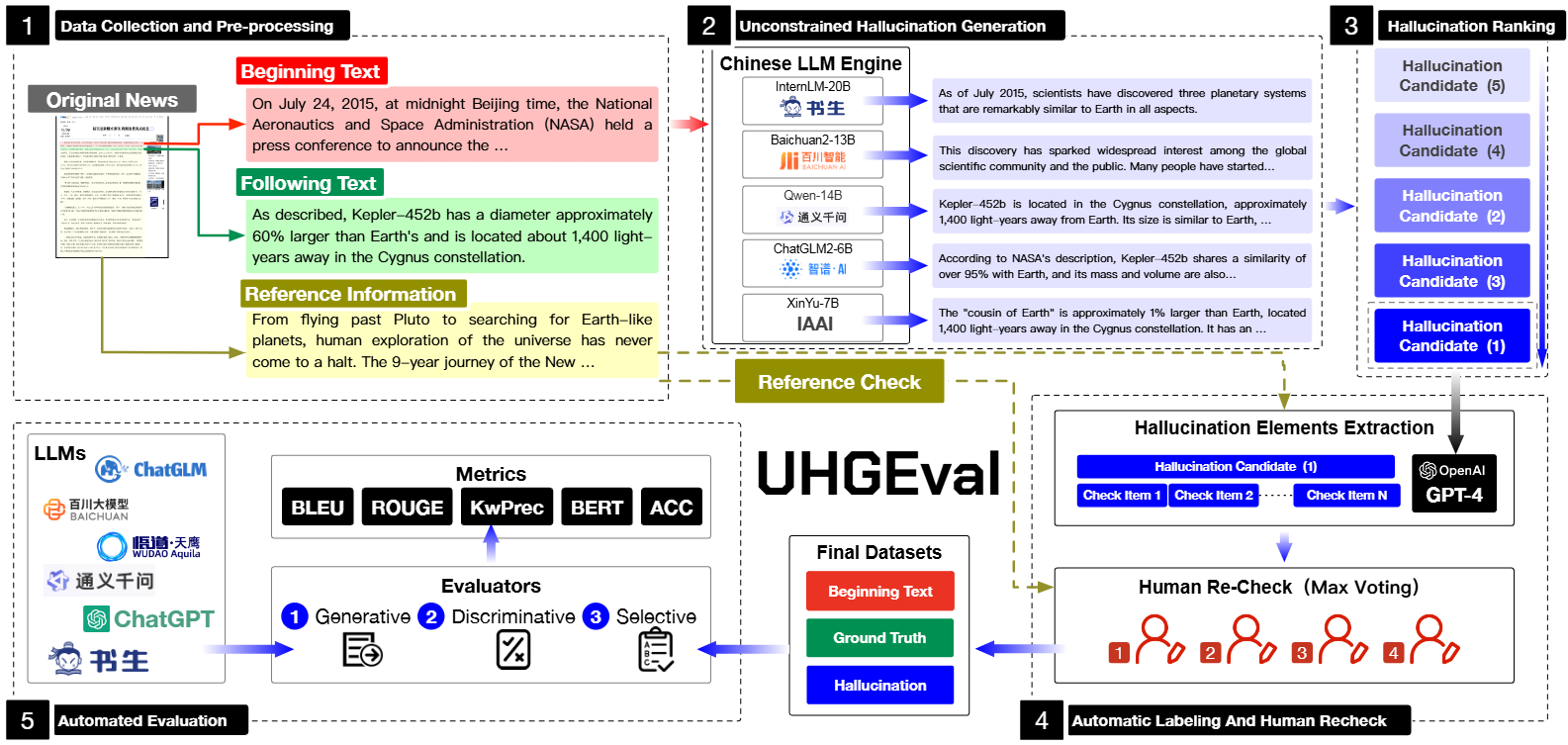

Large language models (LLMs) produce hallucinated text, which compromises their practical utility in professional contexts. To assess the reliability of LLMs, numerous initiatives have developed benchmarks for evaluating hallucination. However, these benchmarks often rely on constrained generation techniques to build their evaluation datasets because of cost and time limitations, for instance by inducing hallucinations in a directed way or by deliberately modifying authentic text to introduce them. Such techniques are not congruent with the unrestricted text generation demanded by real-world applications. Furthermore, a well-established Chinese-language dataset dedicated to hallucination evaluation is presently lacking. Consequently, we have developed the \( \mathbb{U} \)nconstrained \( \mathbb{H} \)allucination \( \mathbb{G} \)eneration Evaluation (\( \mathbb{UHG} \)Eval) benchmark, which contains hallucinations generated by LLMs with minimal restrictions. Alongside it, we have established a comprehensive evaluation framework to help subsequent researchers run scalable and reproducible experiments. We have also evaluated prominent Chinese LLMs and the GPT series models to derive insights regarding hallucination.

@article{UHGEval,
  title={UHGEval: Benchmarking the Hallucination of Chinese Large Language Models via Unconstrained Generation},
  author={Xun Liang and Shichao Song and Simin Niu and Zhiyu Li and Feiyu Xiong and Bo Tang and Zhaohui Wy and Dawei He and Peng Cheng and Zhonghao Wang and Haiying Deng},
  journal={arXiv preprint arXiv:2311.15296},
  year={2023},
}